The results of the MITRE Engenuity ATT&CK Evaluation of the Wizard Spider and Sandworm adversaries were officially released [1] last week. We are very proud of the Malwarebytes EDR results in the MITRE Engenuity test, which directly reflect the work of a relentless core EDR team and the lessons learned from participating in prior MITRE Engenuity testing rounds.

MITRE Engenuity provides the results in a structured format which allows for deeper understanding of the products being tested. Thanks to this level of reporting, we can see how well each product is prepared to detect, and ideally prevent, attacks by advanced adversaries.

The MITRE Engenuity data also allows anybody to determine the level of visibility and the level of analytics coverage of each EDR product tested. But it allows much more than that. Based on the data, anybody can also derive the level of configuration required for best detection, the signal-to-noise ratio, the level of investigation needed to understand and act on alerts, and much more.

As a summary of our analysis of the data so far, we believe that the MITRE Engenuity results back up our claims that Malwarebytes EDR:

- Needs little or no configuration customization

- Provides the best analytics coverage, useful alerts, and a high signal-to-noise ratio

- Is effective at preventing advanced attacks

MITRE Engenuity ATT&CK Emulations 101

MITRE Engenuity replicates well-known attacks by reconstructing the various steps of attacks on enterprise networks, from the initial compromise to the exfiltration of sensitive data and persistence. In the MITRE Engenuity Round 4 evaluation, the attacks replicated were those of the cybercrime groups Wizard Spider and Sandworm. Each attack step includes several sub-steps that go in-depth into how the attack is carried out. The more sub-steps the security software identifies, the greater the visibility into that attack step, and thus the better the chance for the product and the customer to identify and react to the attack.

Prior to the four days of intense testing, vendors are allowed to configure and deploy their products with their optimum settings. After MITRE Engenuity carries out the emulation on the victim machines, vendors need to show MITRE Engenuity how their product performed against each sub-step of the attack. For each sub-step, vendors can showcase “No detection,” “Telemetry detection,” “General detection,” or higher quality detections such as MITRE Engenuity-mapped Tactic and Technique detections. MITRE Engenuity gathers the details of every step along the way during the investigation and reporting of findings.

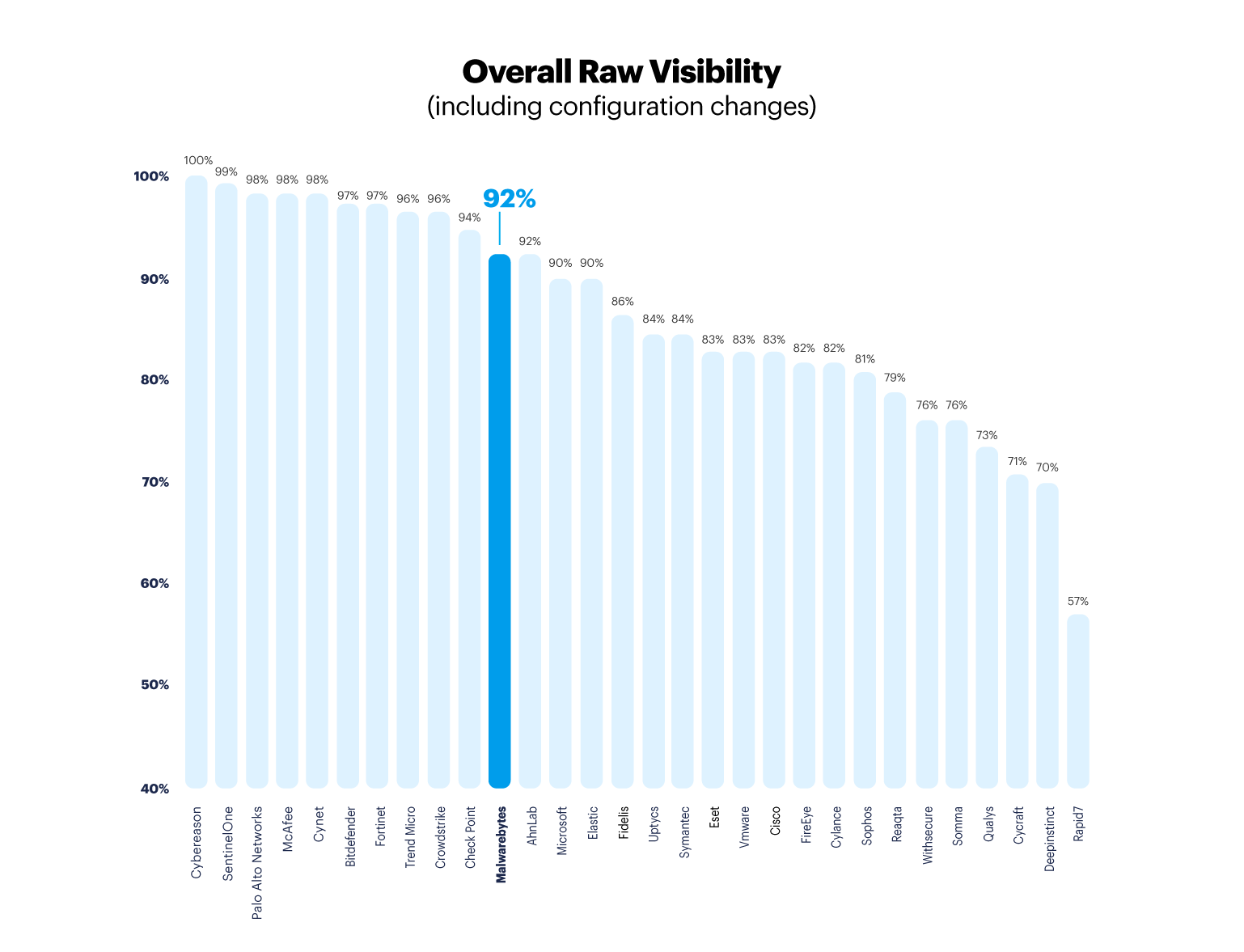

Overall raw visibility results

The MITRE Engenuity ATT&CK Evaluation of Wizard Spider and Sandworm involved 109 sub-steps altogether. The emulations which focus on the Windows platform account for most of them: 90 of the 109 sub-steps are exclusive to Windows. The remaining 19 sub-steps are carried out on the Linux platform. Vendors are evaluated based on the number of detections over the corresponding number of sub-steps for the platforms they participated in. For vendors who did not participate in the Linux emulation, the total number of sub-steps is 90. For vendors who participated in the Linux emulation, the total number of sub-steps is 109.
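As a rough illustration of why the denominator matters, the ratio described above can be sketched in a few lines of Python. The function names and the detection count of 81 are hypothetical and chosen only for this example; only the totals of 90 and 19 sub-steps come from the evaluation.

```python
# Sub-step totals for the Round 4 evaluation, as described in this post.
WINDOWS_SUBSTEPS = 90
LINUX_SUBSTEPS = 19


def total_substeps(took_linux_test: bool) -> int:
    """Denominator for a vendor: 90 (Windows only) or 109 (Windows + Linux)."""
    return WINDOWS_SUBSTEPS + (LINUX_SUBSTEPS if took_linux_test else 0)


def raw_visibility(detected_substeps: int, took_linux_test: bool) -> float:
    """Share of applicable sub-steps with at least one detection."""
    total = total_substeps(took_linux_test)
    if not 0 <= detected_substeps <= total:
        raise ValueError("detected_substeps must be between 0 and the total")
    return detected_substeps / total


# Made-up count, for illustration only: the same number of detected
# sub-steps yields a different ratio depending on the denominator.
print(f"{raw_visibility(81, took_linux_test=False):.1%}")  # 90.0%
print(f"{raw_visibility(81, took_linux_test=True):.1%}")   # 74.3%
```

Dividing a Windows-only vendor's detections by 109 would understate its score, which is why the two totals have to be kept apart.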

Malwarebytes did not participate in the Linux test because our EDR product for Linux was not yet available during the MITRE Engenuity evaluation in October 2021. However, Malwarebytes EDR for Linux is available in beta today, with detection capabilities similar to those of the Malwarebytes EDR for Windows agent.

The following “overall raw visibility” ratios are based on the corresponding number of sub-steps for each vendor. When reviewing the results or calculating scores, pay attention to the total number of sub-steps (90 or 109) to ensure accurate scores.

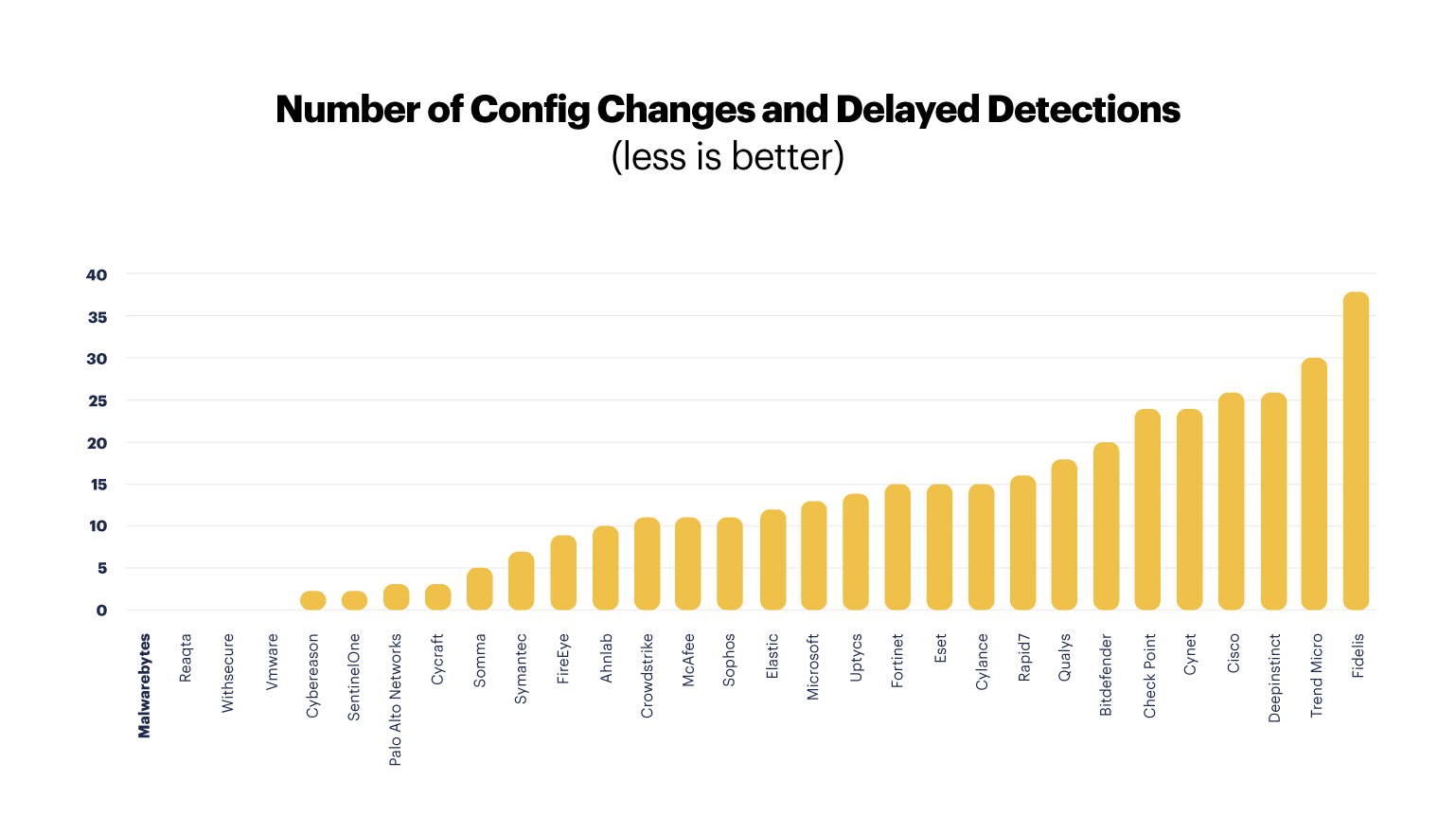

A very important note about Modifiers and “Configuration Changes”

During the evaluation, vendors cannot change the configuration of the tested product, as this would affect the accuracy of the results. However, MITRE Engenuity does allow configuration changes to the EDR product if the vendor can provide better, higher quality details for a specific sub-step, or after a specific sub-step is missed. Sub-steps which the product detects after a configuration change are marked with a “Config Change” modifier. These modifiers help us understand the limitations of each product in dealing with attacks from advanced adversaries: they indicate which changes, tweaks, or manual detections the vendor added during the test in order to detect a specific sub-step that the product did not detect to the vendor’s liking the first time it was tested. Based on the MITRE Engenuity methodology [2]:

- The vendor is allowed to perform changes to the product such as obtaining telemetry from alternative data sources, which may not yet be readily available to the typical enterprise customer. These modifiers are labeled as “Config Change (Data Source)”.

- The vendor is allowed to create or provide a higher quality detection by modifying the detection logic and triggering new alerts. These modifiers are labeled as “Config Change (Detection Logic)”.

- The vendor is allowed to change the UI of the product to provide better mapping to MITRE Engenuity Tactics and Techniques. These modifiers are labeled as “Config Change (UX)”.

- The vendor and product may trigger an alert after a delay, typically because of a manual submission to a sandbox by the EDR operator, or from a Managed Detection and Response (MDR) team. These modifiers are labeled as “Delayed Detection”.

Vendors are allowed to make use of Configuration Changes and Delayed Detections after the first pass of testing with the original config.

At Malwarebytes we believe any EDR product should strive to be easy to use out-of-the-box and without requiring advanced configuration, especially given the high demand and low supply of specialized IR personnel.

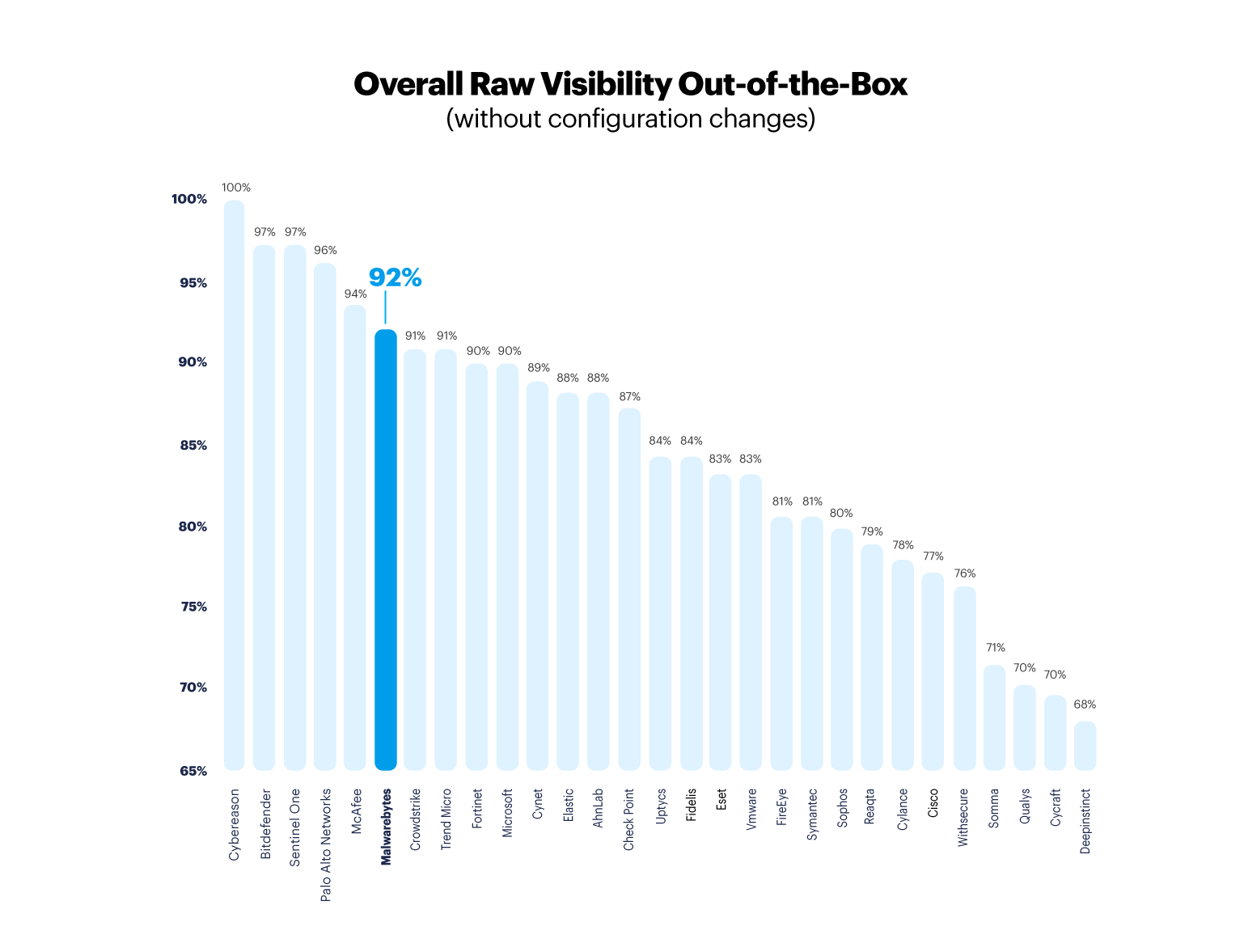

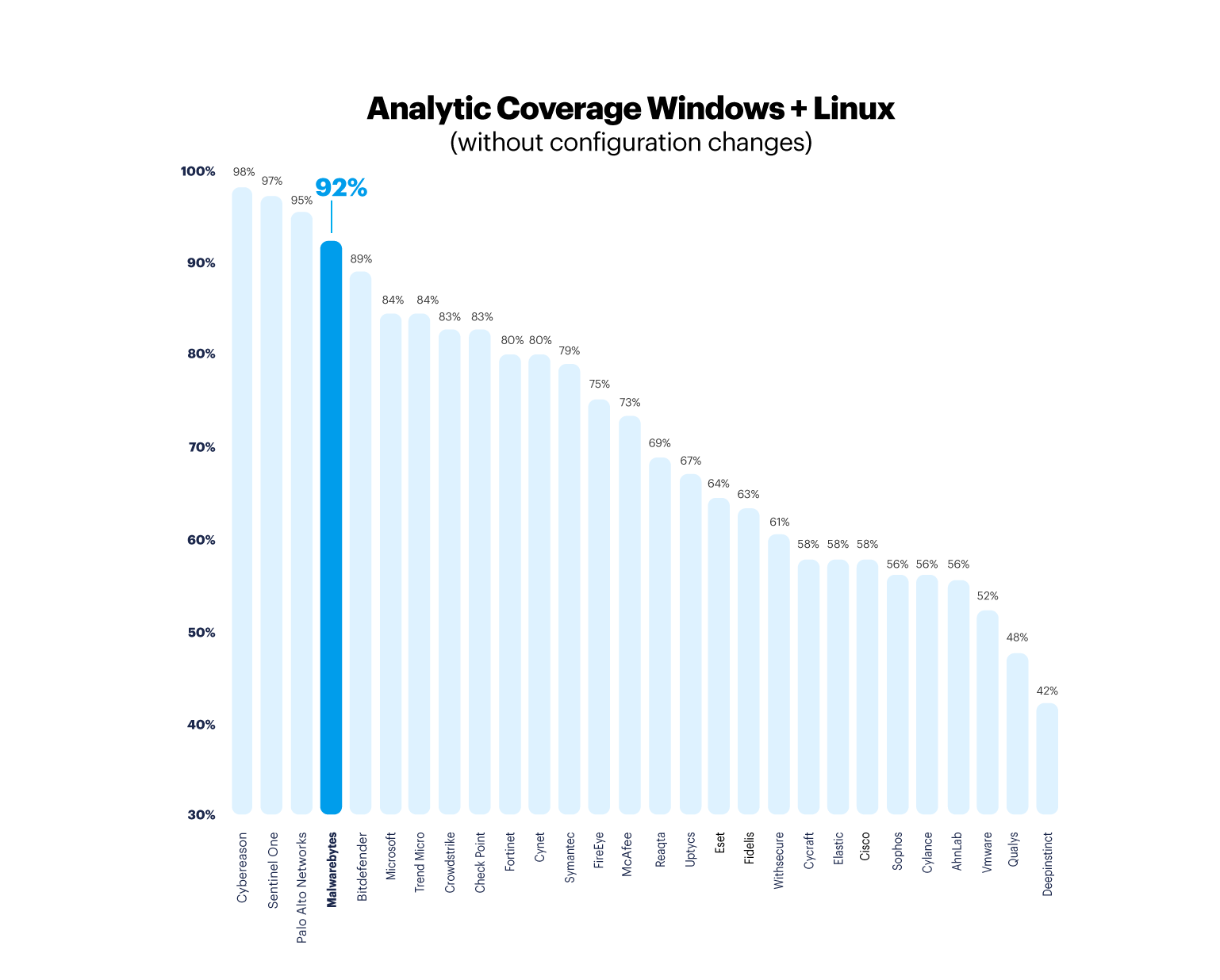

Overall raw visibility results without configuration changes

We wanted an interpretation of the data which would represent the out-of-the-box experience of a typical customer. Therefore, in the following data analysis we discard all detections from any vendor which are the result of a Configuration Change. Config Change detections are not representative of the typical experience that a customer would have with the EDR product. These detections derive from the vendor itself re-configuring the product to detect something that wasn’t detected during initial MITRE Engenuity testing.

For the following graph, higher quality detections such as Technique are downgraded to Telemetry detections if they are the result of a “Config Change (UX)” or “(Detection Logic)”. Telemetry-only detections are discarded if there is an associated “Config Change (Data Source)” modifier.
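The downgrade and discard rules above can be sketched roughly as follows. This is an illustrative sketch only, not the parser referenced in this post. The `Detection` type and function names are hypothetical, and several choices are assumptions: Delayed Detections are dropped, any detection relying on a “Config Change (Data Source)” is dropped, and a Telemetry detection carrying a UX or Detection Logic change is kept as Telemetry.

```python
from dataclasses import dataclass
from typing import List, Optional

# Detection categories "enriched with analytics knowledge".
ANALYTIC_CATEGORIES = {"General", "Tactic", "Technique"}


@dataclass(frozen=True)
class Detection:
    # Hypothetical record: "Telemetry", "General", "Tactic" or "Technique".
    category: str
    modifiers: frozenset = frozenset()


def out_of_box(d: Detection) -> Optional[Detection]:
    """Return the detection as counted without config changes, or None if discarded."""
    mods = d.modifiers
    # Assumption: delayed detections and data-source changes are dropped outright.
    if "Delayed Detection" in mods or "Config Change (Data Source)" in mods:
        return None
    if mods & {"Config Change (UX)", "Config Change (Detection Logic)"}:
        # The analytic needed a change, so only the underlying telemetry counts.
        return Detection("Telemetry")
    return d


def out_of_box_detections(detections: List[Detection]) -> List[Detection]:
    """Apply the rules to every detection recorded for one sub-step."""
    kept = (out_of_box(d) for d in detections)
    return [d for d in kept if d is not None]


# Made-up sub-step with two detections, for illustration only.
substep = [
    Detection("Technique", frozenset({"Config Change (Detection Logic)"})),
    Detection("Telemetry", frozenset({"Config Change (Data Source)"})),
]
print([d.category for d in out_of_box_detections(substep)])  # ['Telemetry']
```

A sub-step whose list comes back empty would count as a miss in the out-of-the-box view.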

Our parser [3] is available to replicate this analysis of discarding Configuration Changes and Delayed Detections. If we discard Configuration Changes and Delayed Detections during the test, the “overall raw visibility” results change noticeably:

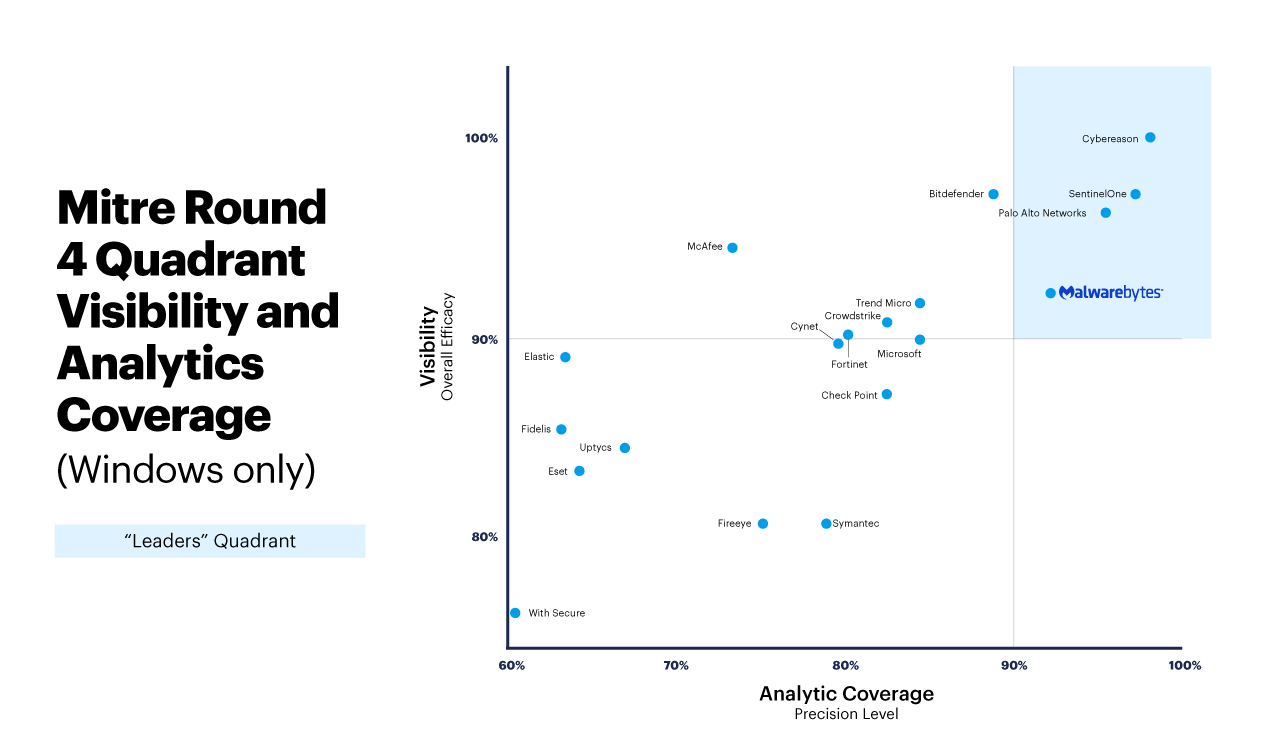

The MITRE Engenuity Analytics Coverage—a focus on detection quality

The above approach, which looks only at “overall raw visibility”, is the quickest but probably also the least accurate way to interpret the data. It does not take into account aspects which are critical to understanding the quality of the EDR product being evaluated.

MITRE Engenuity provides a much more interesting and useful datapoint to determine EDR detection quality, which is “Analytics Coverage”. As defined by MITRE Engenuity, Analytics Coverage is “the ratio of sub-steps with detections enriched with analytics knowledge (e.g. at least one General, Tactic, or Technique detection category)”.
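To make the definition concrete, here is a minimal sketch of the ratio. The input layout (one list of detection category names per sub-step) and the function name are assumptions made for this example, and the sample data is made up.

```python
from typing import List

# Categories that count as "enriched with analytics knowledge".
ANALYTIC_CATEGORIES = {"General", "Tactic", "Technique"}


def analytics_coverage(substeps: List[List[str]]) -> float:
    """Ratio of sub-steps with at least one General, Tactic, or Technique detection."""
    if not substeps:
        raise ValueError("at least one sub-step is required")
    enriched = sum(1 for cats in substeps if ANALYTIC_CATEGORIES.intersection(cats))
    return enriched / len(substeps)


# Made-up data: four sub-steps, two of them with an analytic detection.
sample = [
    ["Telemetry", "Technique"],  # counts
    ["Telemetry"],               # telemetry only, does not count
    [],                          # no detection
    ["General"],                 # counts
]
print(f"{analytics_coverage(sample):.1%}")  # 50.0%
```

Note that a Telemetry-only sub-step raises raw visibility but leaves this ratio unchanged, which is what makes the two measures diverge.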

An Analytics Coverage index highlights the higher quality detections, which make it easier for the user or practitioner to act and to initiate response and remediation. By contrast, too many detections deriving from “Telemetry” events, or delayed detections which were manually added later by the vendor, are indicative of EDR solutions which require large, specialized teams to operate. We believe a quality EDR product should be quick to identify and highlight the root problem of each incident in an easy-to-understand manner which facilitates response and remediation. If we focus on the Analytics Coverage indicator of quality alerts for each vendor, the results vary considerably:

MITRE Engenuity results for Windows

The MITRE Engenuity test involved 109 sub-steps altogether, of which 90 were executed on the Windows platform. In this section we analyze the results of each vendor against the Windows attacks only.

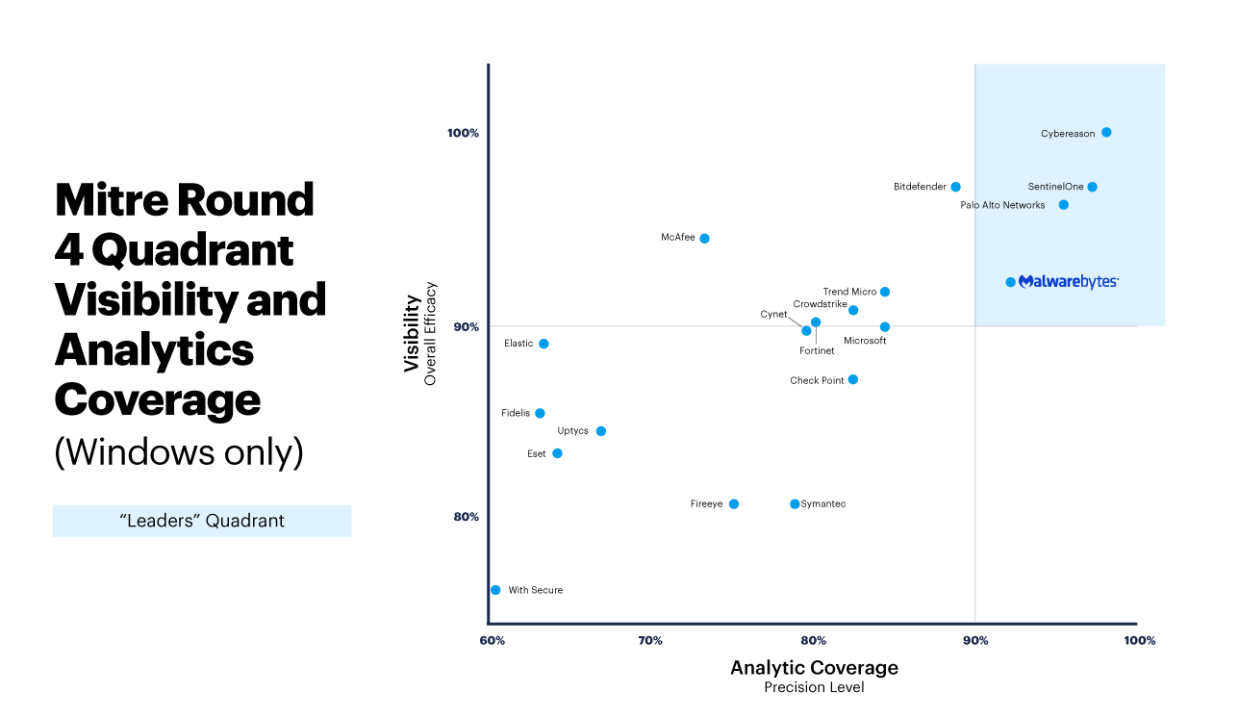

We plot both the “Overall Raw Visibility” and “Analytics Coverage” datapoints from the above paragraphs into a quadrant to see where each EDR product’s capabilities fall within these dimensions.

Just like before, we discard detections which come from a Configuration Change or Delayed Detection, because we consider them “misses” at the time of the test and not representative of the customer experience without a specialized and dedicated SOC.

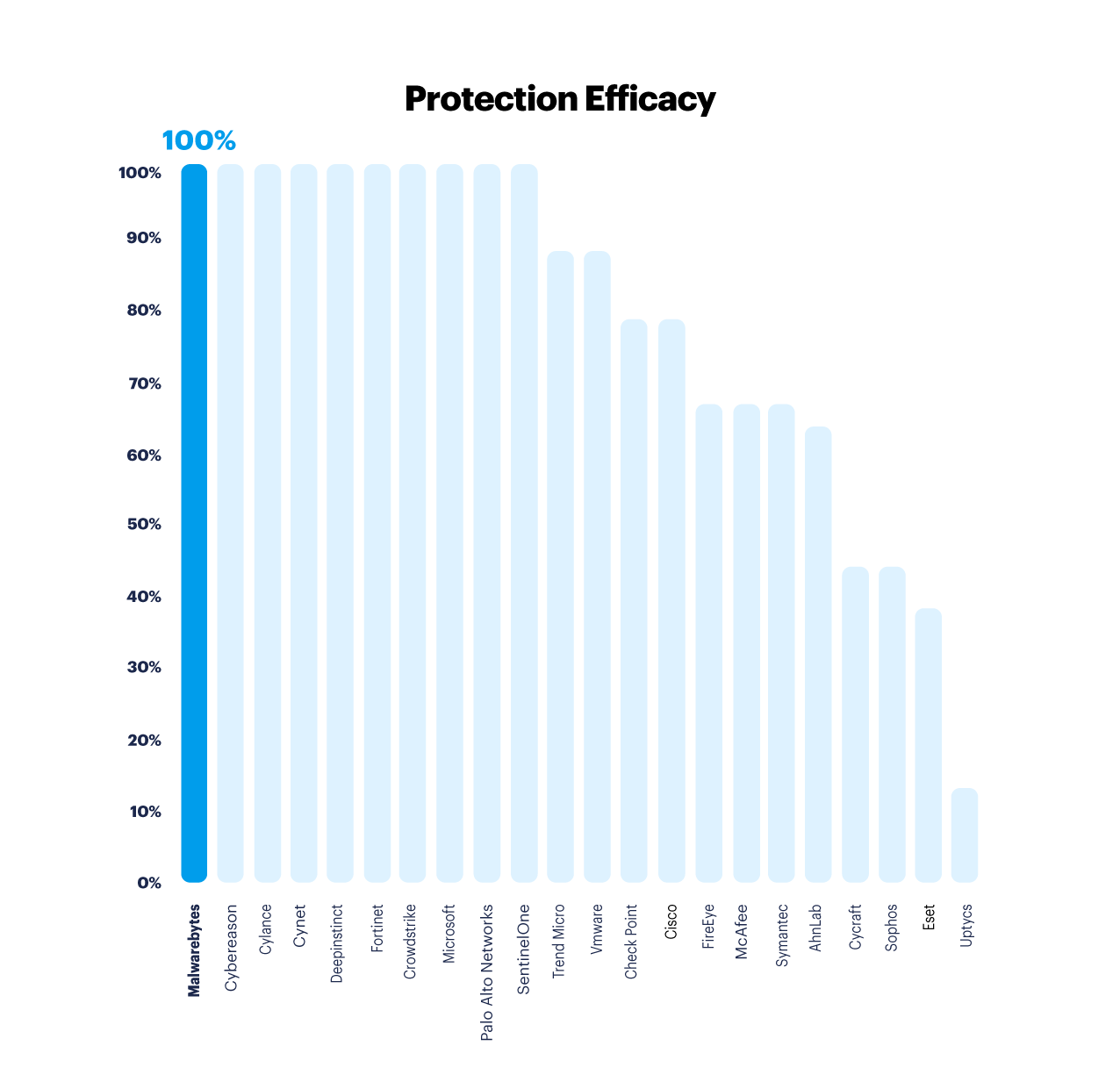

Protection

While all EDR products should be able to DETECT, not every EDR product has the ability to PREVENT advanced attack tactics.

Prevention and real-time blocking of advanced attack tactics is a delicate matter which involves balancing effective real-time protection through advanced exploit mitigations, AI/ML, behavior monitoring, sandboxing, and heuristics on one side, and minimizing conflicts and the need for highly specialized configurations and tuning on the other side.

We are very glad and humbled to be amongst good company in this challenging test.

Summary

Malwarebytes is one of the very few companies in the MITRE Engenuity Round 4 Evaluation that did not need to make any Config Changes to trigger quality detections, while also achieving some of the top scores in visibility and analytics. This points to Malwarebytes as one of the top EDR leaders.

We believe that the MITRE Engenuity Round 4 results are a true representation of the Malwarebytes EDR strengths as a better out-of-the-box solution:

- Little or no need to customize highly specialized configurations

- High quality alerts and signal-to-noise ratio

- Effective not just at detecting, but also preventing advanced attacks

MITRE Engenuity does not offer its own interpretation or ranking of the test results. But if we were to apply our own interpretation of the results to a ranking methodology and framework similar to those used by testing organizations such as AV-Comparatives and MRG-Effitas, it could look something like this:

| Leaders | Contenders | Challengers |
|---|---|---|
| Cybereason | Microsoft | Check Point Software |
| SentinelOne | Trend Micro | Symantec |
| Palo Alto Networks | CrowdStrike | FireEye |
| Malwarebytes | Fortinet | McAfee |
| Cynet | ReaQta | |
| Cylance** | Cisco | |
| VMware Carbon Black** | AhnLab | |
| CyCraft | | |
| Sophos | | |

| Leaders Ranking Criteria* | Contenders Ranking Criteria | Challengers Ranking Criteria |
|---|---|---|
| 90%+ analytics coverage out-of-the-box (without config changes); 90%+ Protection scores | 80%+ analytics coverage out-of-the-box (without config changes); 80%+ Protection scores | 40%+ analytics coverage out-of-the-box (without config changes); 40%+ Protection scores |

* The ranking criteria could be stricter if instead of looking at e.g. “90%+ analytics coverage” we set the criteria of “90%+ Technique coverage”, as suggested [4] by Josh Zelonis. We fully agree with Josh’s point of view that the market needs to evolve to using Technique detections as the most important metric.

** Vendors that achieve the CHALLENGERS criteria but who also achieve 80%+ protection rates get bumped to CONTENDERS since we believe that effective prevention is more cost-effective than detection.

*** Vendors shown are listed in order of higher Analytics Coverage. Not all vendors who participated in the MITRE Engenuity Round 4 evaluations are included in the ranking above. Those that didn’t participate in the Protection test and/or who achieved low analytics coverage or protection scores may not fit the ranking criteria and thresholds as defined above.
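For readers who want to apply the thresholds themselves, the criteria and the bump rule above could be sketched as below. This sketch assumes that both the analytics coverage and the Protection threshold must be met for a tier, which is our reading of the table rather than something it states explicitly. The function name, the “Unranked” label, and the sample scores are hypothetical.

```python
def tier(analytics: float, protection: float) -> str:
    """Map out-of-the-box analytics coverage and protection (fractions 0..1) to a tier."""

    def meets(threshold: float) -> bool:
        # Assumption: a tier requires both scores to reach its threshold.
        return analytics >= threshold and protection >= threshold

    if meets(0.90):
        return "Leaders"
    if meets(0.80):
        return "Contenders"
    if meets(0.40):
        # Bump rule: Challengers with 80%+ protection move up to Contenders.
        return "Contenders" if protection >= 0.80 else "Challengers"
    return "Unranked"  # does not fit the thresholds above


# Made-up scores, for illustration only.
print(tier(0.95, 0.92))  # Leaders
print(tier(0.60, 0.85))  # Contenders (bumped up by protection)
print(tier(0.60, 0.50))  # Challengers
print(tier(0.30, 0.90))  # Unranked
```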

This post was authored by Bogdan Demidov, Marco Giuliani, and Pedro Bustamante.